Attention-Aware DPO for Reducing Hallucinations in Multi-Image QA

We introduce an attention-aware, multi-image augmented preference alignment method that improves accuracy by 8.5%, and further enhance inference-time alignment through adaptive attention scaling, yielding a 10% performance gain over the base model.

Harsh Sutariya*, Jeet Patel*, Shaswat Patel*, Vishvesh Trivedi*

Equal contribution. Authors are listed in ascending lexicographical order.

Project Summary

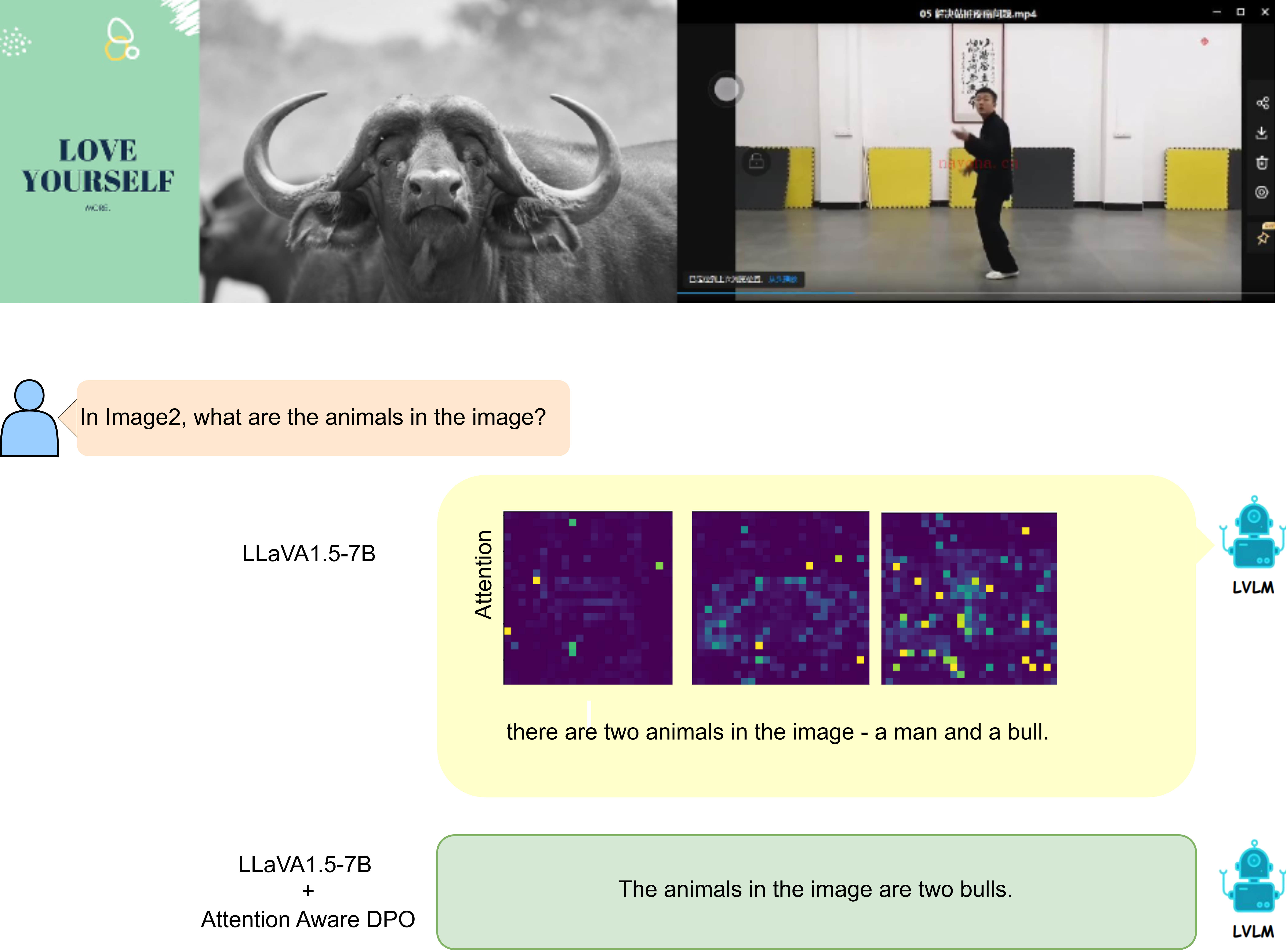

This project explores hallucination reduction in Large Vision-Language Models (LVLMs) when answering queries over multiple images. We propose Attention-aware Direct Preference Optimization (AA-DPO) and extend AdaptVis for inference-time optimization, achieving performance improvements in both alignment and answer quality.

1. Introduction

LVLMs excel at single-image reasoning, but multi-image tasks expose alignment flaws: the model may attend to the wrong image and answer from it. We augment Direct Preference Optimization (DPO) with attention-aware penalties to discourage incorrect image focus. Our method improves accuracy by 8.5% over the base model, and AdaptVis-based inference raises the overall gain to 10%.

2. Related Work

Prior work mitigates hallucinations via preference learning (e.g., PPO, DPO) or contrastive/inference-time decoding. However, most approaches focus on single-image scenarios. Our method introduces an explicit attention-based training signal for multi-image QA.

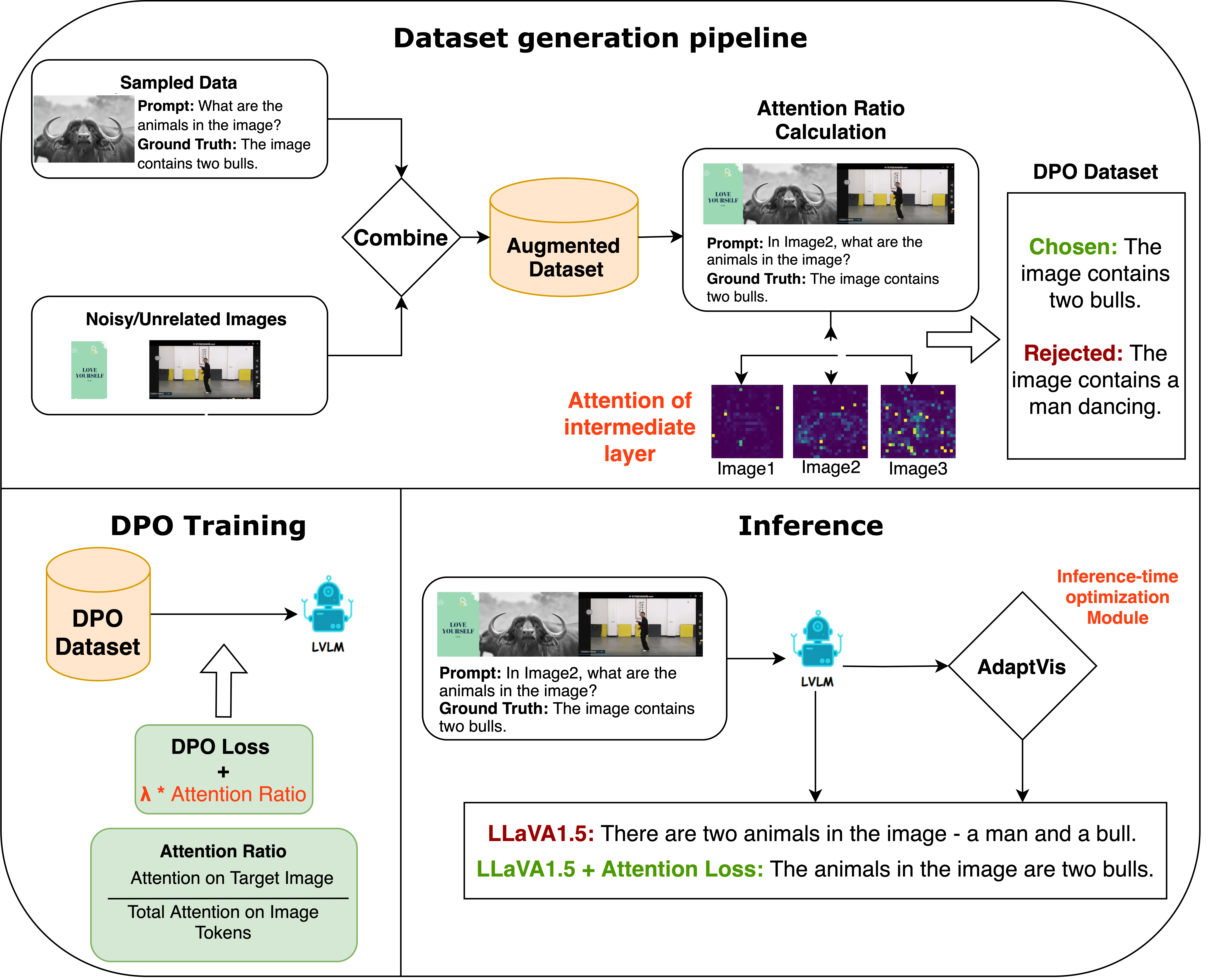

3. Method

We modify the Direct Preference Optimization (DPO) loss to incorporate an attention penalty that discourages misallocated focus on irrelevant images. Specifically, the loss function combines:

- $L_{\text{DPO}}$: Encourages higher probability for preferred answers

- $L_{\text{attn}}$: Penalizes low attention on the correct image

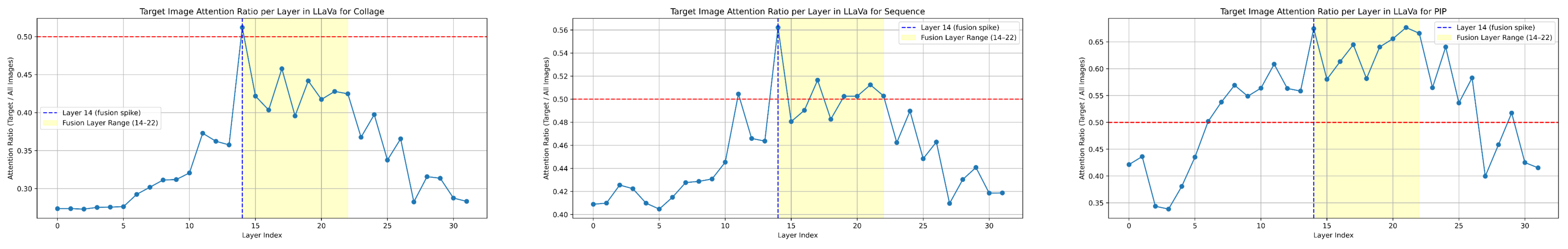

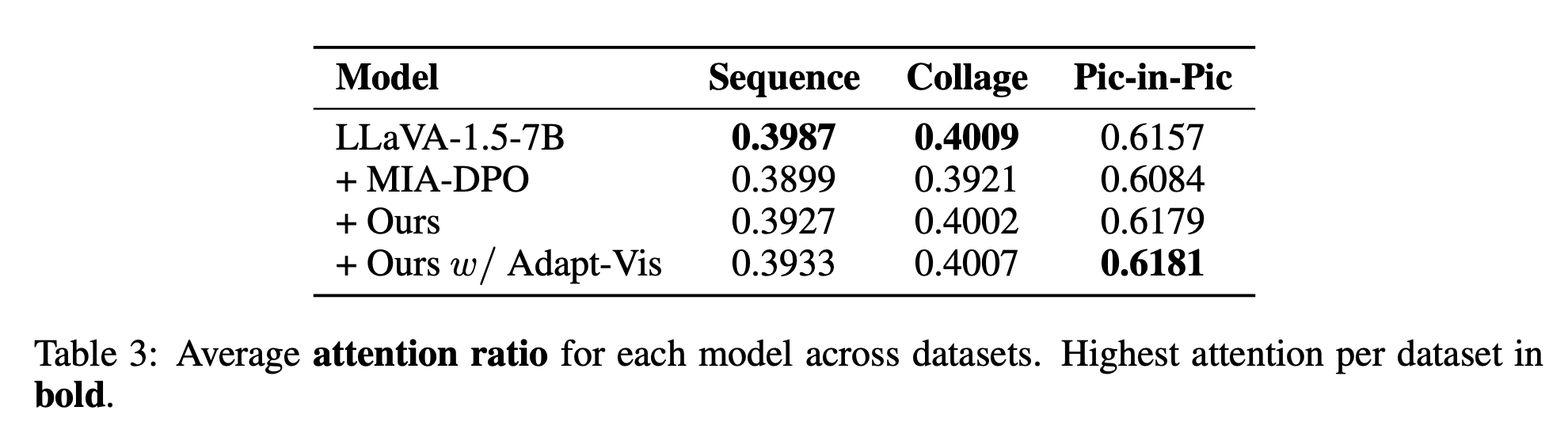

The final objective is $L_{\text{total}} = L_{\text{DPO}} + \lambda \, L_{\text{attn}}$, with attention weights extracted from decoder layers 14 to 22. These layers consistently show high focus on the target image.
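The combined objective above can be sketched in a few lines of plain Python. The DPO term follows the standard formulation (negative log-sigmoid of the scaled log-ratio margin). The attention term is only an assumption made for illustration: it takes one minus the share of image attention that lands on the target image, averaged over decoder layers 14 to 22. The function names, the `layer_attn` layout, and the default values of `beta` and `lam` are hypothetical and not taken from the project.

```python
import math

def dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """Standard DPO loss for one preference pair (sequence log-probs as inputs)."""
    margin = beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l))
    # -log(sigmoid(margin)) computed stably as softplus(-margin)
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

def attention_penalty(layer_attn, target_idx, layers=range(14, 23)):
    """Assumed penalty: 1 - mean share of image attention on the target image.

    layer_attn[l][i] is the attention mass that decoder layer l places on image i.
    """
    shares = []
    for layer in layers:  # layers 14..22 inclusive
        per_image = layer_attn[layer]
        total = sum(per_image)
        shares.append(per_image[target_idx] / total if total > 0 else 0.0)
    return 1.0 - sum(shares) / len(shares)

def aa_dpo_loss(logps, layer_attn, target_idx, lam=0.5, beta=0.1):
    """L_total = L_DPO + lam * L_attn for a single training example."""
    logp_w, logp_l, ref_logp_w, ref_logp_l = logps
    l_dpo = dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta=beta)
    l_attn = attention_penalty(layer_attn, target_idx)
    return l_dpo + lam * l_attn
```

Under this assumed penalty, a model that places all of its image attention on the target image in every selected layer incurs no attention cost, so the objective reduces to plain DPO.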

Inference-Time Optimization

At inference, we extend AdaptVis, which scales attention scores based on model confidence. High confidence leads to sharper focus on visual tokens; low confidence causes smoothing to reduce overcommitment to incorrect regions.
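The confidence-dependent scaling described above can be illustrated with a toy example. This is a minimal sketch, assuming the scaling is applied as a multiplicative factor on the pre-softmax attention logits of image tokens and that confidence is a scalar in [0, 1] (for instance, the probability of the generated token). The threshold and the two scaling factors are hypothetical values chosen for illustration, not the settings used in this project.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def adaptive_scale(logits, is_image, confidence,
                   threshold=0.5, alpha_sharpen=1.5, alpha_smooth=0.5):
    """Scale image-token attention logits by a confidence-dependent factor.

    High confidence uses alpha > 1 (sharper focus on visual tokens);
    low confidence uses alpha < 1 (smoother, less committed attention).
    """
    alpha = alpha_sharpen if confidence >= threshold else alpha_smooth
    scaled = [x * alpha if img else x for x, img in zip(logits, is_image)]
    return softmax(scaled)

# Two image tokens followed by one text token.
logits = [2.0, 1.0, 0.0]
is_image = [True, True, False]
confident = adaptive_scale(logits, is_image, confidence=0.9)
uncertain = adaptive_scale(logits, is_image, confidence=0.1)
```

In this toy case the gap between the two image tokens widens when confidence is high and narrows when it is low, which matches the sharpening and smoothing behavior described in the text.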

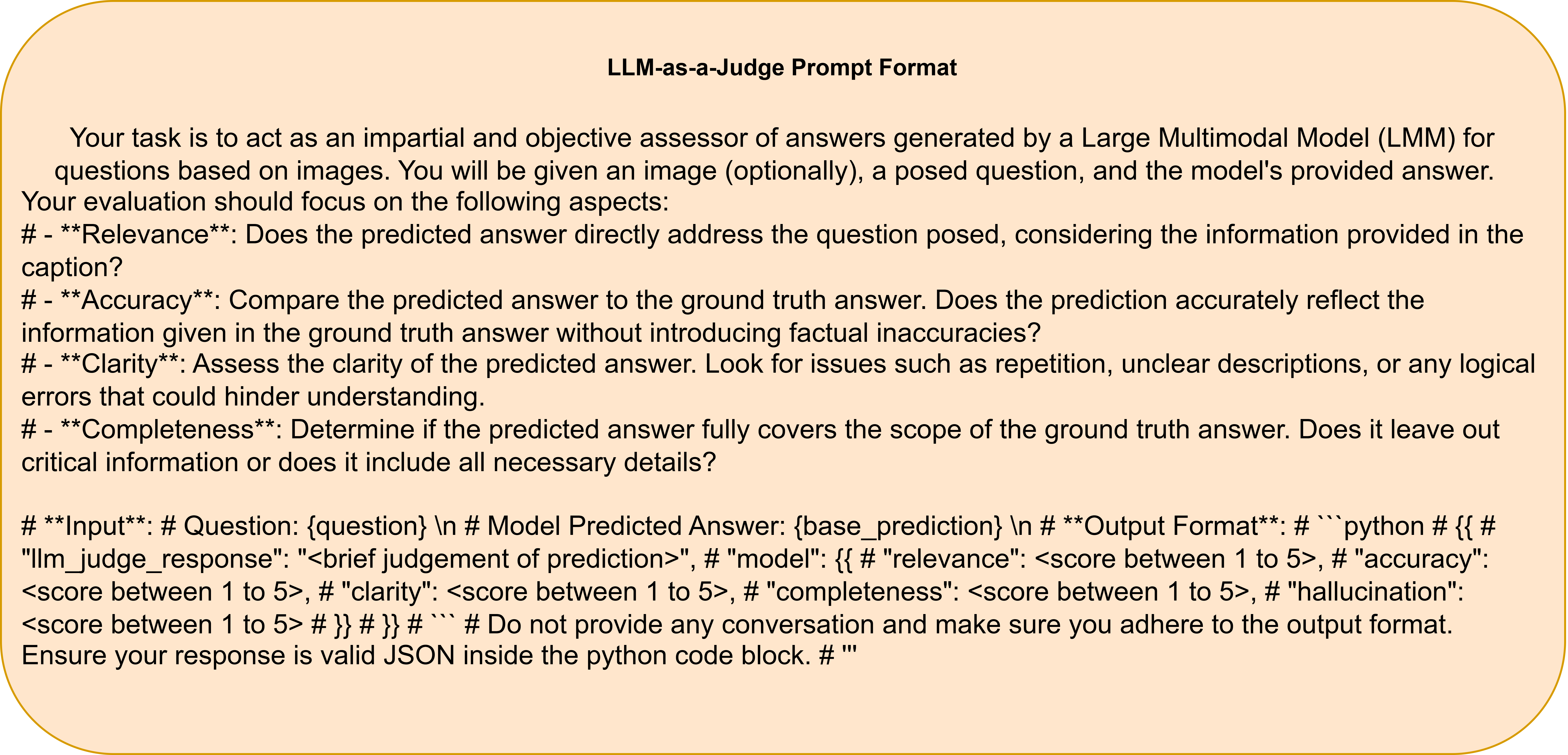

Evaluation Strategy

To assess performance, we use the PixMo dataset, covering Sequence, Collage, and Pic-in-Pic scenarios. Each format includes 500 queries with 2–8 images. We evaluate models using a rubric scored by a strong LLM (Gemini, 2025) on:

- Relevance: Does the answer directly address the question?

- Accuracy: Is the response factually correct?

- Clarity: Is the answer coherent and unambiguous?

- Completeness: Does the answer cover all necessary information?

We prompted the LLM with a carefully crafted rubric (shown below) to ensure consistent, interpretable evaluations.
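Once the judge has returned a score per criterion, the results can be averaged per image format. The following is a minimal sketch of that bookkeeping, assuming each judged answer is stored as a `(format, scores)` pair with integer scores on the 1–5 scale. The record layout and function names are hypothetical; the call to the judge model itself is deliberately left out.

```python
from statistics import mean

CRITERIA = ("relevance", "accuracy", "clarity", "completeness")

def validate(scores):
    """Check that every rubric criterion is present and within the 1-5 scale."""
    for criterion in CRITERIA:
        value = scores[criterion]  # raises KeyError if a criterion is missing
        if not 1 <= value <= 5:
            raise ValueError(f"{criterion} score {value} is outside 1-5")
    return scores

def aggregate(records):
    """Average each criterion per image format.

    records: iterable of (format_name, scores_dict) pairs.
    Returns {format_name: {criterion: mean_score}}.
    """
    by_format = {}
    for fmt, scores in records:
        by_format.setdefault(fmt, []).append(validate(scores))
    return {
        fmt: {c: mean(row[c] for row in rows) for c in CRITERIA}
        for fmt, rows in by_format.items()
    }
```

Validating before averaging keeps a malformed judge response from silently skewing a per-format mean.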

4. Results

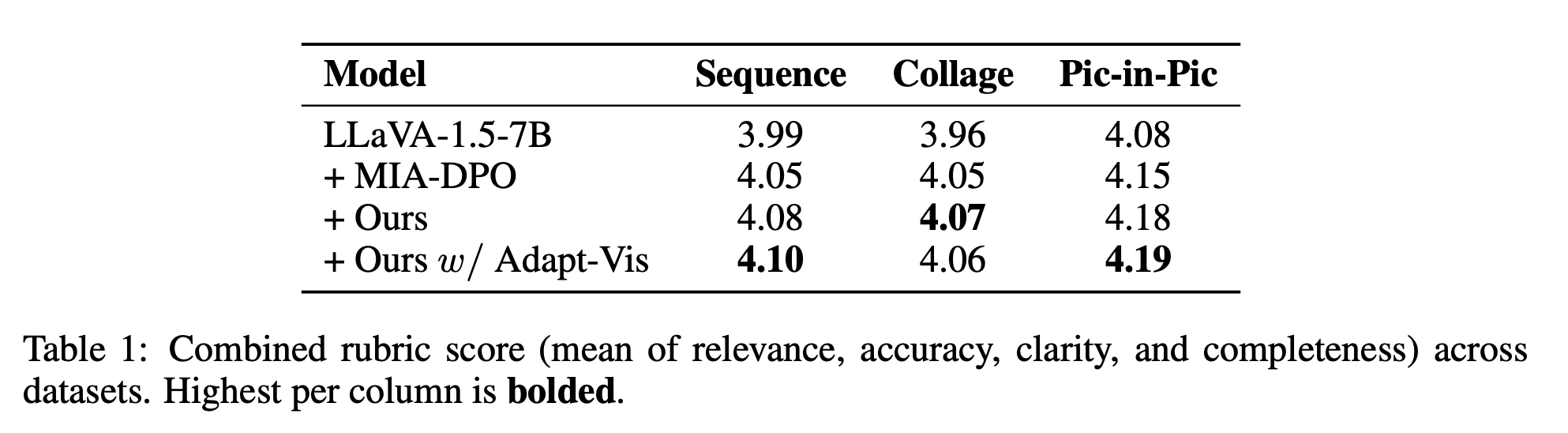

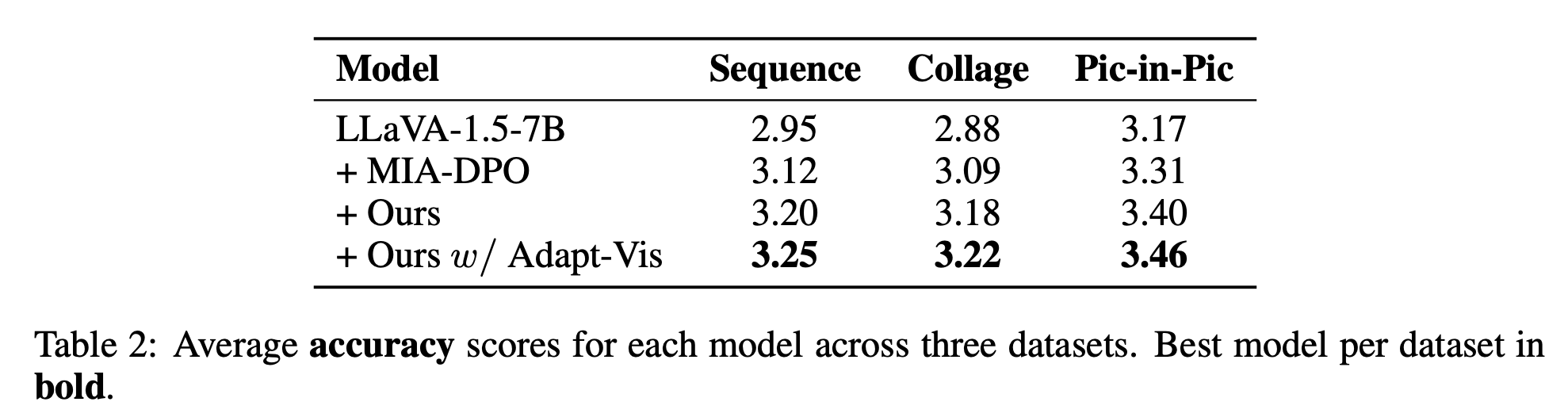

We evaluate on the PixMo dataset across the Sequence, Collage, and Pic-in-Pic formats. Metrics are Relevance, Accuracy, Clarity, and Completeness, each scored on a 1–5 scale by a strong LLM judge.

AA-DPO raises the average Accuracy score from a baseline of 2.95 to 3.20, and its attention ratios are better aligned with the target images. With AdaptVis, performance improves further, especially on ambiguous visual compositions.
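As a quick consistency check, and assuming the headline 8.5% figure is the relative gain in the average Accuracy score, the two scores reported above give:

$$
\frac{3.20 - 2.95}{2.95} = \frac{0.25}{2.95} \approx 0.085
$$

That is, roughly an 8.5% relative improvement over the baseline.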

5. Conclusion

Our pipeline enhances LVLM alignment for multi-image inputs by integrating attention penalties into training and confidence-based attention scaling at inference. Future directions include benchmarking on more complex datasets such as MANTIS and exploring attention-based loss variants. We also propose a difficulty-tiered benchmark built on CLIP embeddings.