Do retrieval heads speak the same language?

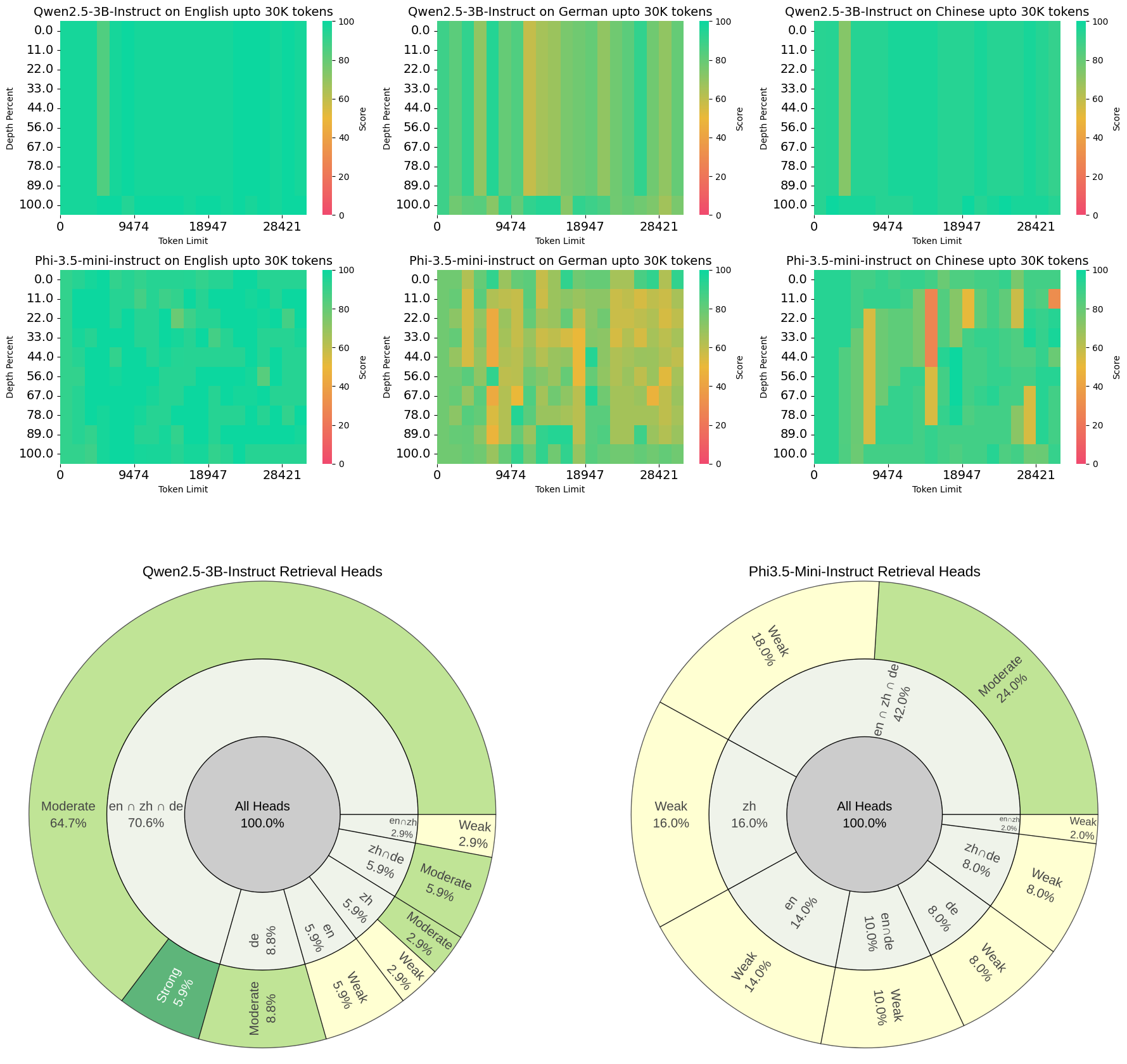

This project analyzes retrieval heads in multilingual LLMs using Needle-in-a-Haystack (NIAH) tasks in English, German, and Chinese. We find that strong retrieval heads are largely language-agnostic and critical for retrieval performance: masking them causes significant accuracy drops. These findings offer insights for optimizing KV caching and the efficiency of multilingual models.
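A minimal sketch of how "strong retrieval heads" and their cross-language overlap could be quantified, assuming the copy-paste retrieval score of Wu et al. (2024): a head scores a hit when its most-attended context position lies inside the needle and holds the token the model is currently generating. This is not necessarily this project's pipeline. The function names (`retrieval_score`, `top_k_heads`, `jaccard`), the `(layer, head)` indexing, the precomputed `argmax_positions` input, and all toy scores are hypothetical.

```python
from typing import Dict, Sequence, Set, Tuple

# A head is identified by (layer index, head index); hypothetical convention.
Head = Tuple[int, int]


def retrieval_score(
    context_tokens: Sequence[int],
    needle_span: Tuple[int, int],
    generated_tokens: Sequence[int],
    argmax_positions: Sequence[int],
) -> float:
    """Fraction of needle positions that one head copies during decoding.

    needle_span is half-open [start, end) over context_tokens.
    argmax_positions[t] is the context position this head attends to most
    when the model emits generated_tokens[t] (assumed precomputed).
    """
    start, end = needle_span
    needle_len = end - start
    if needle_len <= 0:
        return 0.0
    copied: Set[int] = set()
    for token, pos in zip(generated_tokens, argmax_positions):
        # Copy event: the head's top-attended position is inside the needle
        # and holds exactly the token being generated.
        if start <= pos < end and context_tokens[pos] == token:
            copied.add(pos)
    return len(copied) / needle_len


def top_k_heads(scores: Dict[Head, float], k: int) -> Set[Head]:
    """The k heads with the highest retrieval score (ties broken by head index)."""
    ranked = sorted(scores, key=lambda h: (-scores[h], h))
    return set(ranked[:k])


def jaccard(a: Set[Head], b: Set[Head]) -> float:
    """Jaccard overlap |a & b| / |a | b|; 1.0 means identical head sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


if __name__ == "__main__":
    # Toy example: the needle occupies context positions 2..4 (tokens 1, 2, 3).
    ctx = [5, 9, 1, 2, 3, 7]
    print(retrieval_score(ctx, (2, 5), [1, 2, 3], [2, 3, 0]))  # 2 of 3 copied

    # Made-up per-language scores, for illustration only.
    en = {(0, 0): 0.9, (1, 3): 0.8, (2, 1): 0.1}
    zh = {(0, 0): 0.6, (2, 1): 0.5, (1, 3): 0.4}
    print(jaccard(top_k_heads(en, 2), top_k_heads(zh, 2)))
```

Under this sketch, a Jaccard overlap close to 1.0 between the English, German, and Chinese top-k sets would point to language-agnostic retrieval heads. One common way to then mask those heads is to zero their outputs before the attention output projection and re-run the NIAH evaluation.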

Work in preparation. Preprint and code coming soon.

Teaser